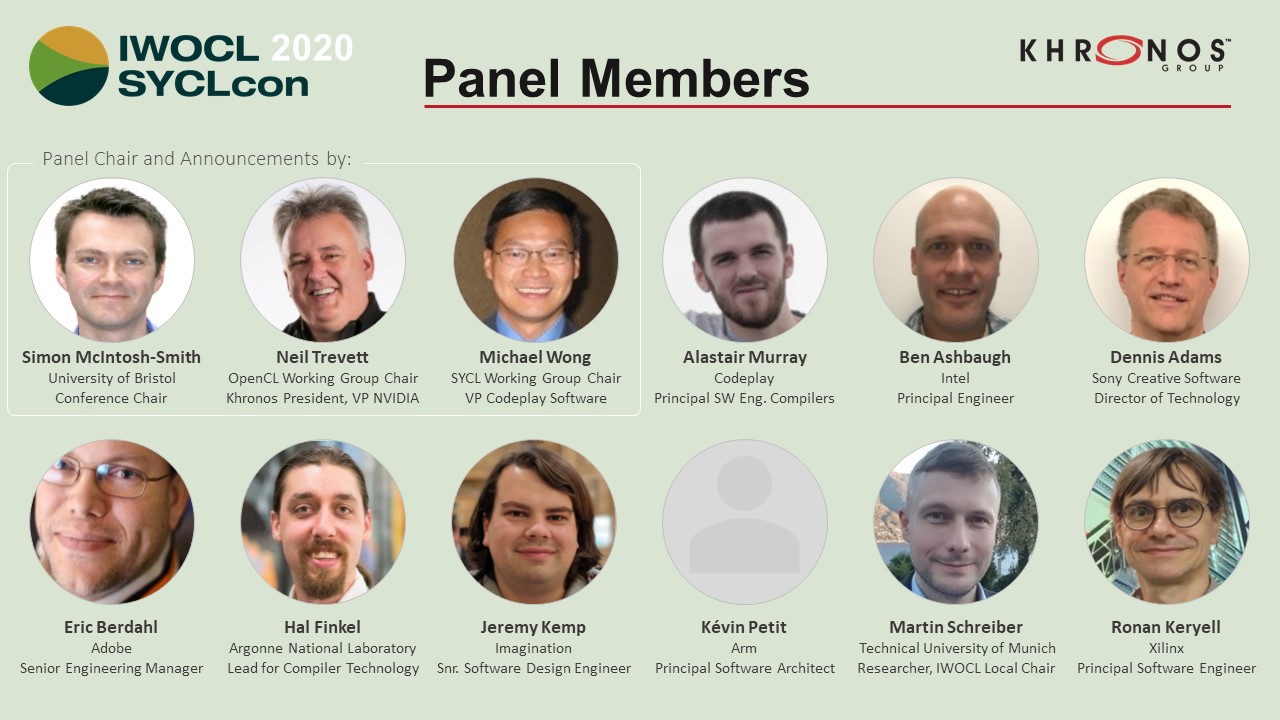

This live webinar took place on April 28 and featured significant announcements and updates from the Khronos Group on both OpenCL and SYCL. An open panel discussion followed, a session that is always a favorite with our audience. Leading members of the Khronos OpenCL, SYCL and SPIR working groups joined experts from the OpenCL development community on our ‘virtual stage’ to debate the issues of the day and answer questions from the online audience.

A “Virtual” Welcome to IWOCL & SYCLcon 2020

This year we had a record number of submissions. The quality was very high and competition was fierce in all categories: research papers, technical presentations, tutorials and posters. We thank all the authors of the accepted submissions for creating their video presentations. The Slack workspace accompanying the event will remain open until at least the end of May if you would like to discuss any of the submissions. Register to join the Slack workspace.

Simon McIntosh-Smith, General Chair. University of Bristol.

Martin Schreiber, Local Co-Chair. TUM

Christoph Riesinger, Local Co-Chair. Intel

Program of Talks: Posters
